What Is TOPS in an NPU?

AI workloads have grown rapidly and demand more computational power for complex tasks, which general-purpose CPUs and GPUs often struggle to deliver efficiently. Two terms come up repeatedly in this context: NPUs, processors specialized for AI workloads, and AI TOPS, the metric commonly used to rate their performance.

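TOPS (tera operations per second, that is, trillions of operations per second) measures the theoretical peak AI inference performance of a processor. For an NPU, the figure follows from its architecture and its clock frequency, so it describes what the hardware could do at best rather than what a given model will achieve. NPUs, or Neural Processing Units, are specialized processors designed to handle AI tasks more efficiently than traditional CPUs and GPUs.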
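
As a rough illustration, peak TOPS is commonly estimated as 2 × (number of multiply-accumulate units) × (clock frequency) / 10^12, counting each multiply-accumulate (MAC) as two operations. The sketch below applies that formula. The MAC count and clock speed are hypothetical values chosen for illustration, not the specification of any real chip.

```python
def peak_tops(mac_units: int, frequency_hz: float) -> float:
    """Theoretical peak TOPS, counting each MAC as 2 operations (multiply + add) per cycle."""
    return 2 * mac_units * frequency_hz / 1e12

# Hypothetical NPU: 16,384 MAC units clocked at 1.4 GHz (assumed values)
example_tops = peak_tops(16_384, 1.4e9)
print(f"{example_tops:.1f} TOPS")  # prints "45.9 TOPS"
```

Doubling either the number of MAC units or the clock frequency doubles the result, which is why the figure depends on both architecture and frequency.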

What is the Difference Between NPU and GPU TOPS?

GPUs often quote a high number of TOPS and handle a wide range of parallel workloads, including graphics operations and AI at various precisions. NPUs may offer fewer TOPS, but they are optimized to perform specific AI operations efficiently, often at lower precisions such as INT8.

| Feature | NPU | GPU |
|---|---|---|
| Task Specificity | Optimized for specific AI tasks | Handles a wide range of tasks |
| Precision | Often uses lower precisions | Supports various precisions |
| Efficiency | Higher for specific AI operations | Lower for AI, higher for graphics |
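
To make "lower precision" concrete, here is a minimal sketch of symmetric INT8 quantization, one common way to map floating-point weights to 8-bit integers. It is an illustrative assumption about how a model might be prepared for low-precision hardware, not the method of any particular NPU or toolkit. The function names and sample weights are hypothetical.

```python
def quantize_int8(values):
    """Symmetric INT8 quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values) or 1.0  # guard against all-zero input
    scale = max_abs / 127
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float values from the INT8 codes."""
    return [q * scale for q in quantized]

weights = [0.5, -1.27, 0.02, 1.0]  # hypothetical weights
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
print(codes)  # [50, -127, 2, 100]
```

Each restored value differs from the original by at most half a quantization step, which is the accuracy traded for smaller and cheaper arithmetic.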

Key Features of NPUs

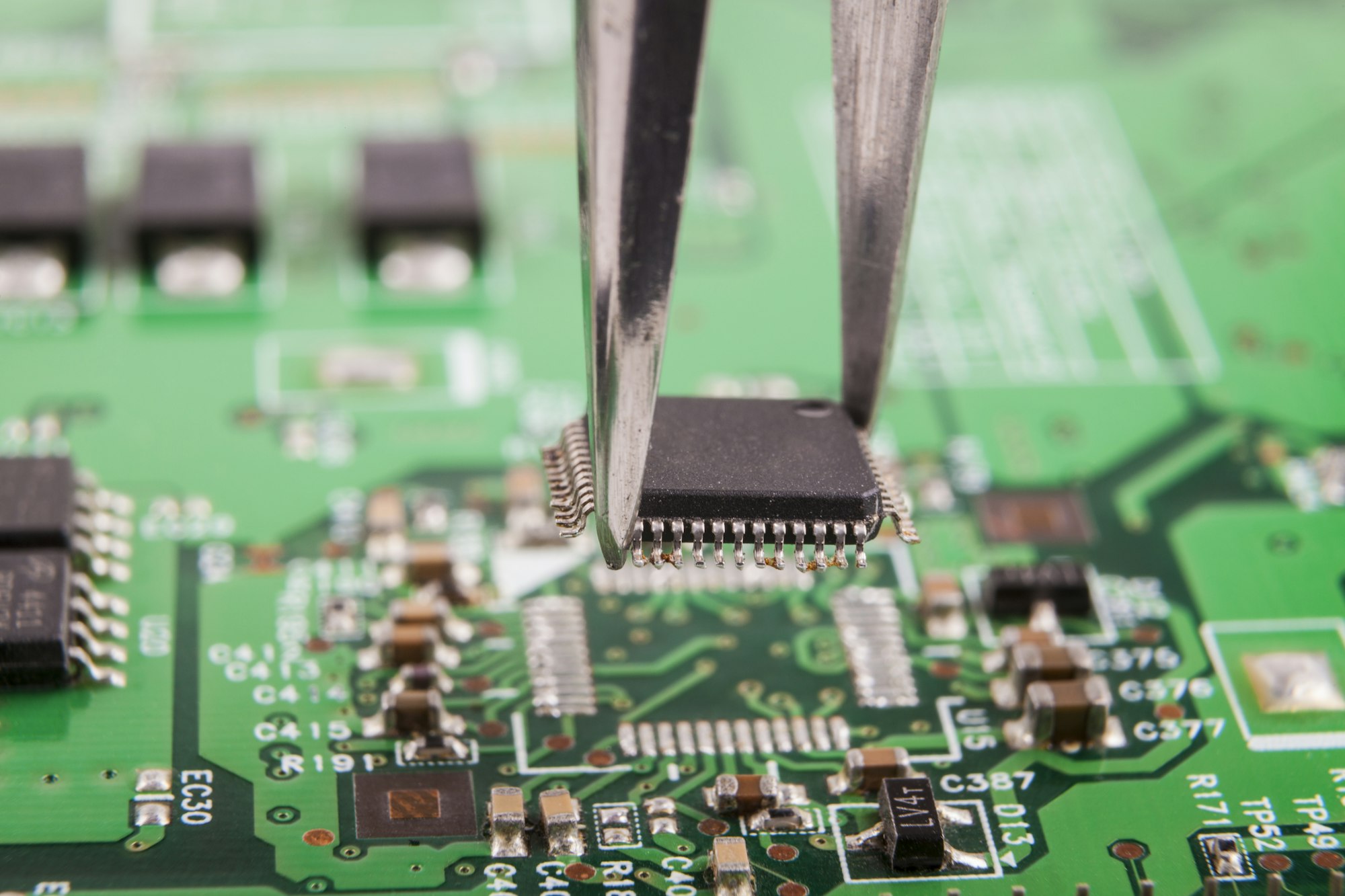

- Optimized for Machine Learning: NPUs are specifically designed to accelerate neural network operations. They can handle tasks such as matrix multiplication and other deep learning computations much faster than general-purpose CPUs.

- High Throughput: NPUs can process a large number of operations per second, which is crucial for running complex neural networks and processing large datasets efficiently.

- Energy Efficiency: One of the significant advantages of NPUs is their energy efficiency. They can perform AI computations at a lower energy cost compared to traditional processors, which is particularly beneficial for battery-powered devices like smartphones and tablets.

- Support for Various AI Frameworks: Many NPUs are compatible with popular AI frameworks and libraries such as TensorFlow and PyTorch, making them versatile for different AI applications.

- Customization and Flexibility: Some NPUs offer customizable architectures, which allow developers to tailor the hardware to better fit specific neural network requirements, enhancing performance for particular tasks.
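
To connect matrix multiplication with throughput, the sketch below counts the operations in one matrix multiplication and estimates the best-case run time at a given peak TOPS. The matrix size and the 45 TOPS figure are illustrative assumptions. Real hardware rarely sustains its peak, so the result is a lower bound, not a prediction.

```python
def matmul_ops(m: int, k: int, n: int) -> int:
    """Operations to multiply an (m x k) matrix by a (k x n) matrix.

    Each of the m*n outputs takes k multiply-accumulates, counted as 2 operations each.
    """
    return 2 * m * k * n

def ideal_seconds(ops: int, peak_tops: float) -> float:
    """Lower bound on run time if the hardware sustained its peak TOPS."""
    return ops / (peak_tops * 1e12)

ops = matmul_ops(4096, 4096, 4096)
best_case = ideal_seconds(ops, peak_tops=45.0)  # 45 TOPS is an illustrative figure
print(f"{ops / 1e12:.3f} tera-operations, at least {best_case * 1e3:.2f} ms")
# prints "0.137 tera-operations, at least 3.05 ms"
```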

AI TOPS Benchmarking and Comparison

Comparing TOPS values is a common starting point for evaluating AI processors. Because TOPS is a peak figure, it is most meaningful when the values being compared are quoted at the same precision. Used with that caveat, it helps narrow down suitable NPUs and GPUs for a specific AI task.

| Processor | TOPS Value | Application |
|---|---|---|
| Qualcomm Snapdragon | 45 TOPS | Mobile AI |
| Intel Neural Compute | 48 TOPS | General AI Applications |
| Nvidia Xavier | 30 TOPS | Autonomous Vehicles |
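
A comparison like the one in the table can be sketched in a few lines. The values below are copied from the table. The helper `rank_by_tops` is a hypothetical name, and the ranking reflects quoted peak figures only, not measured performance on any workload.

```python
# Peak TOPS values copied from the table above
quoted_tops = {
    "Qualcomm Snapdragon": 45,
    "Intel Neural Compute": 48,
    "Nvidia Xavier": 30,
}

def rank_by_tops(tops_by_name):
    """Return (name, tops, ratio to the lowest entry), highest TOPS first."""
    baseline = min(tops_by_name.values())
    ranked = sorted(tops_by_name.items(), key=lambda item: item[1], reverse=True)
    return [(name, tops, tops / baseline) for name, tops in ranked]

ranking = rank_by_tops(quoted_tops)
for name, tops, ratio in ranking:
    print(f"{name}: {tops} TOPS ({ratio:.2f}x the lowest entry)")
```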

The development of NPUs is paving the way for more advanced AI applications. These specialized processors are set to revolutionize various fields, from autonomous vehicles to healthcare, by providing the computational power needed for complex AI tasks.

Understanding AI TOPS and NPUs is essential for harnessing the full potential of AI technologies. By leveraging these specialized processors, we can achieve unprecedented levels of performance and efficiency in AI applications.